Nvidia doesn’t just build graphics cards for avid gamers, and has become a significant supplier for the car industry.

Contributor

The name Nvidia comes from the Latin word for envy, ‘invidia’, and indeed some companies may be envious of the firm’s dominance – alongside AMD – of the consumer graphics processing unit (GPU) market.

Established in 1993 by Jensen Huang (still the company CEO), Chris Malachowsky and Curtis Priem, Nvidia is well known for producing hardware that helps run PC and console games.

The fundamental semiconductor and silicon technologies used in these graphics cards have a wide variety of other applications, however. One of these is to drive the computing systems that enable ADAS (advanced driver assistance systems) and autonomous driving, and Nvidia has made significant investments and progress in this area.

Today, Nvidia’s offerings in the autonomous vehicle and ADAS space can be grouped into four categories: software testing and development environments for autonomous vehicles, self-driving hardware, self-driving software, and a near-turnkey self-driving platform that combines these products into a complete solution carmakers can buy to add automated driving features to their cars.

Not all companies have access to the resources needed to test autonomous vehicles in real-life physical environments, and numerous regulatory and safety hurdles may also prevent them from doing so.

Therefore, many original equipment manufacturers (OEMs) and associated companies choose to test their self-driving and ADAS hardware in a virtual environment before hitting the road to make sure the fundamental principles work in theory.

Many autonomous and ADAS systems also rely on the development of neural networks, which can recognise various objects on the road, including cars, pedestrians and animals, and predict the path that they will take. However, to ‘train’ these networks to work accurately, they require substantial sources of data input, including test images and videos.

Nvidia offers two solutions to meet the needs described above. Nvidia’s Drive Infrastructure includes the supercomputer hardware, software and associated workflows to help OEMs and other companies train their ADAS and autonomous driving neural nets. It includes systems such as the Nvidia DGX SuperPOD, a turnkey supercomputer that companies can use to test these systems.

Nvidia also offers Drive Sim, which the brand claims is a physically accurate simulation platform that includes technologies such as the ‘Neural Reconstruction Engine’.

This aims to bring real-world data directly into the simulation by making it easy to replicate drives recorded by a fleet of suitably equipped vehicles.

Apart from providing OEMs and other developers access to resources to virtually test their ADAS and autonomous driving systems, Nvidia also develops processing hardware that can be used within the car to power these systems.

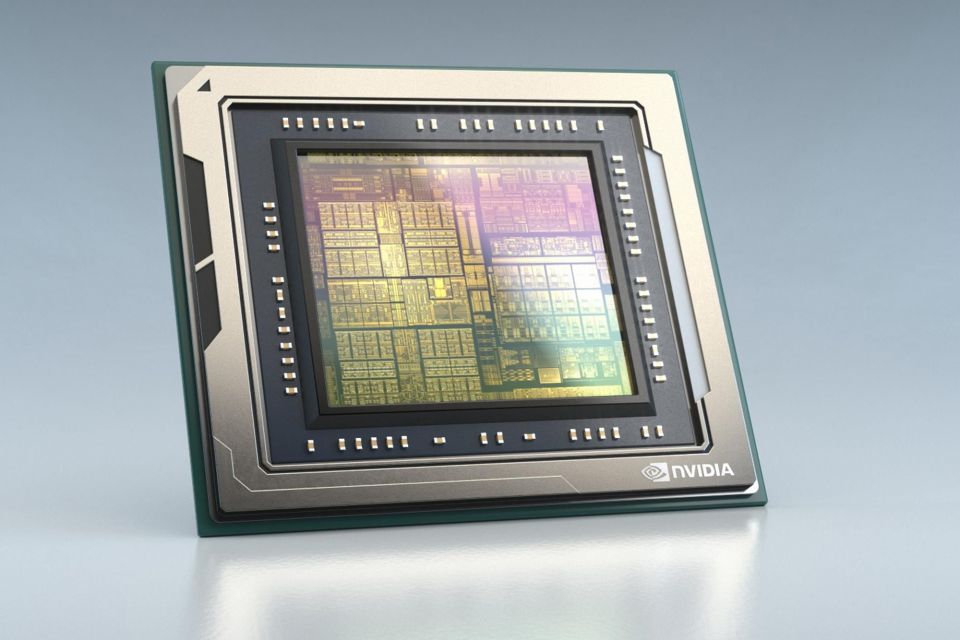

These are known as SoCs, or systems on a chip, and integrate the CPU (central processing unit), GPU, RAM and other components on a single chip.

Nvidia’s Drive Orin is the brand’s most powerful SoC for autonomous driving currently available. It was first announced in December 2019, and production commenced in March this year.

The company claims this SoC can perform up to 254 trillion operations per second, and that its 17 billion transistors make it seven times as powerful as the previous Xavier SoC for advanced driver assistance systems. Moreover, the brand claims that the use of multiple Orin SoCs allows OEMs to scale their ADAS and autonomous driving systems from Level 2 to fully autonomous Level 5 systems.
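As a rough illustration of the arithmetic behind these claims, the sketch below works out the Xavier figure implied by the "seven times" claim, along with a naive ceiling for multi-chip setups. The linear scaling across several SoCs is an assumption made here for simplicity, not something Nvidia states, and the function names are hypothetical.

```python
# Figures taken from the claims quoted above.
ORIN_TOPS = 254        # claimed peak, trillions of operations per second
ORIN_VS_XAVIER = 7     # claimed performance multiple over Xavier


def implied_xavier_tops() -> float:
    """Xavier throughput implied by dividing the Orin claim by the multiple."""
    return ORIN_TOPS / ORIN_VS_XAVIER


def naive_combined_tops(num_socs: int) -> int:
    """Ceiling for several Orin SoCs, ASSUMING perfect linear scaling."""
    if num_socs < 1:
        raise ValueError("num_socs must be at least 1")
    return ORIN_TOPS * num_socs


if __name__ == "__main__":
    print(f"Implied Xavier: about {implied_xavier_tops():.0f} TOPS")
    for n in (1, 2, 4):
        print(f"{n} x Orin: up to {naive_combined_tops(n)} TOPS")
```

Real systems lose some throughput to communication between chips, so the combined figures are best read as upper bounds.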

More recently, Nvidia announced its Drive Thor SoC, expected to be available in vehicles produced from 2025. The company claims this represents a significant leap in computing performance over the current Drive Orin, with a total of up to 2,000 teraflops.

Perhaps just as significantly, Nvidia claims the Thor is sufficiently capable to also power in-cabin infotainment systems and digital instrument clusters, as well as other interior functions which today are distributed between multiple different processors.

Accordingly, the company says that an OEM in the future may be able to cut costs by allocating a portion of Thor’s computing power to support these interior functions (removing the need for separate chips), and the remainder to autonomous driving systems.

While it is relatively easy for an OEM to buy powerful computing hardware off-the-shelf and include it in their latest models, what is perhaps more difficult is developing software that can effectively take advantage of these systems to provide customers with reliable, safe and effective ADAS and autonomous driving systems.

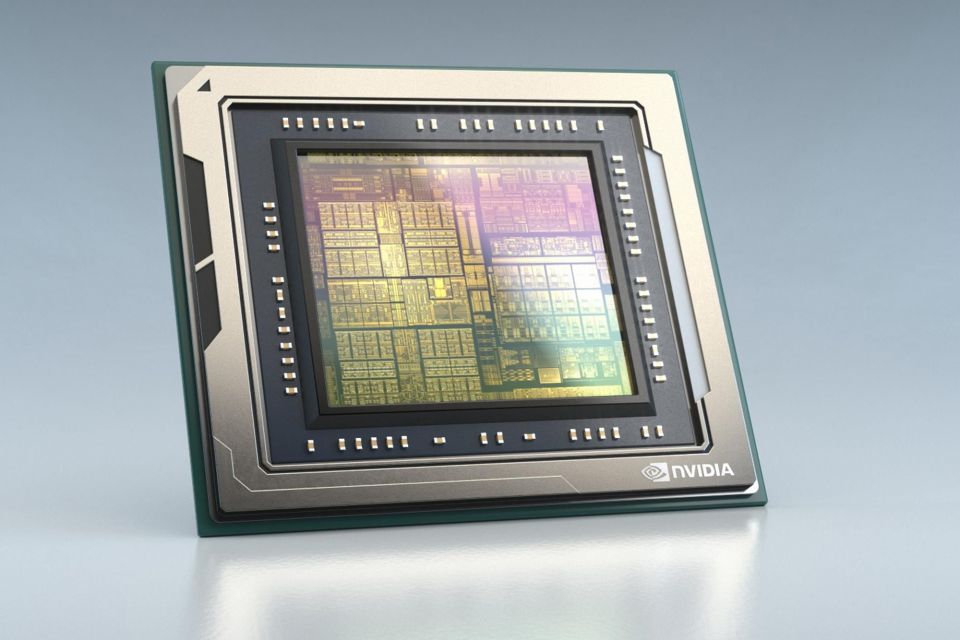

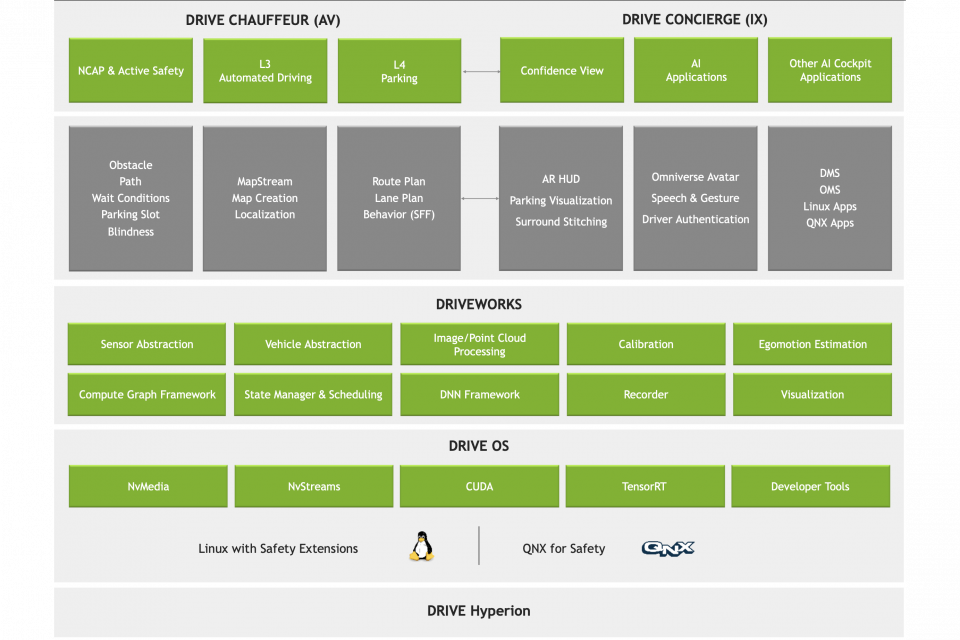

Alongside hardware, Nvidia also offers suitable software to take advantage of the SoCs that it has developed, as well as process inputs from other sensors such as radar, LiDAR and cameras.

The foundation for this is the company’s Drive OS, which is a reference operating system that interfaces closely with hardware such as the Orin and upcoming Thor SoCs. On top of this, Nvidia also offers software ‘layers’ such as DriveWorks, that act as ‘middleware’ and include components such as a sensor abstraction layer that can take inputs from different types of vehicle sensors.
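To give a feel for what a sensor abstraction layer does, here is a minimal sketch in Python. It is not the DriveWorks API: every class and function name below is hypothetical, and the units each sensor reports are assumptions chosen to show why a common format is useful.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Detection:
    """Common format that every sensor reading is converted into."""
    sensor_type: str
    distance_m: float


class Sensor(ABC):
    """Hypothetical uniform interface; not part of DriveWorks."""

    @abstractmethod
    def read(self) -> Detection:
        ...


class Radar(Sensor):
    def __init__(self, range_m: float):
        self._range_m = range_m  # assumed to report metres already

    def read(self) -> Detection:
        return Detection("radar", self._range_m)


class Lidar(Sensor):
    def __init__(self, range_mm: float):
        self._range_mm = range_mm  # assumed to report millimetres

    def read(self) -> Detection:
        # Convert to metres so higher layers never deal with device units.
        return Detection("lidar", self._range_mm / 1000.0)


def nearest(sensors: list[Sensor]) -> Detection:
    """Return the closest detection, regardless of which sensor saw it."""
    return min((s.read() for s in sensors), key=lambda d: d.distance_m)
```

Because `nearest` only ever sees `Detection` objects, a carmaker could in principle swap one sensor for another without touching the software layers above.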

The company has also developed a Drive Chauffeur software layer that uses a variety of neural networks to provide perception, mapping and planning functions. These help the car to estimate distances, and to detect and track objects, and also control vehicle functions such as acceleration, braking and lane positioning.

Due to regulatory and safety restrictions, certain ADAS systems also require the driver to continue monitoring the road ahead in order to function. To support this, Nvidia also offers its Drive Concierge software that incorporates artificial intelligence and other technologies to support driver and occupant monitoring using the car’s interior cameras and other interior sensors.

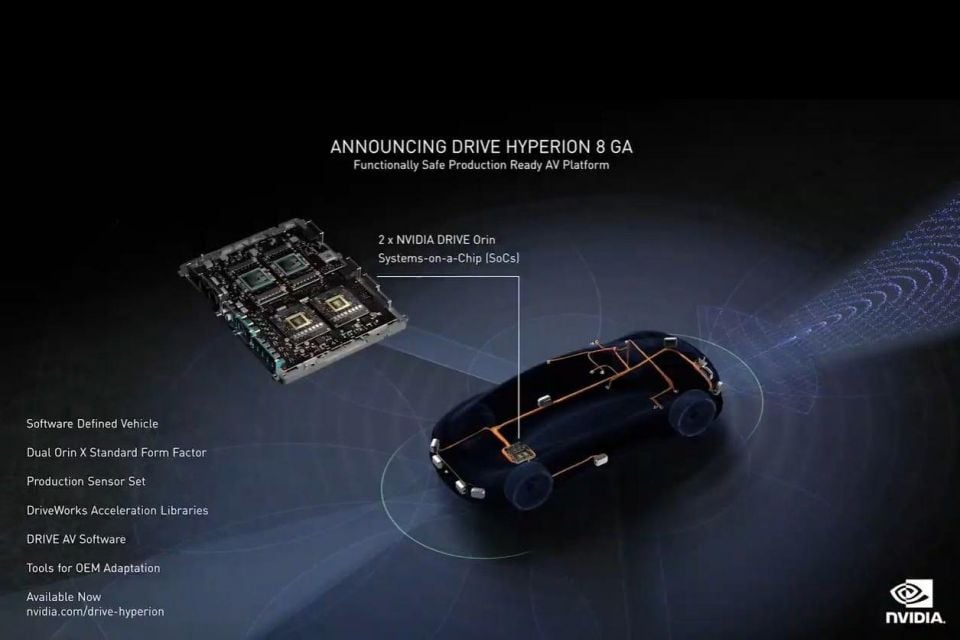

It is possible for OEMs and other suppliers to purchase just one, or a few, of the components that Nvidia has developed above, and integrate them into systems from other suppliers or those that have been built in-house. However, Nvidia also offers a largely complete self-driving platform that incorporates all of these components into a unified solution. This is known as Nvidia’s Drive Hyperion.

The company describes Hyperion as an end-to-end, modular development platform and reference architecture for designing autonomous vehicles. It incorporates Orin hardware and the software described above. The current version, Hyperion 8, can support up to 12 exterior cameras, three interior cameras, nine radar sensors, 12 ultrasonic sensors and up to two LiDAR sensors.
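The Hyperion 8 sensor limits quoted above can be written down as a simple lookup, which makes it easy to tally the maximum sensor count and to check whether a proposed vehicle setup fits. This is an illustrative sketch only; the dictionary keys and function names are hypothetical and do not come from Nvidia.

```python
# Maximum sensor counts quoted for Hyperion 8.
HYPERION_8_MAX_SENSORS = {
    "exterior_cameras": 12,
    "interior_cameras": 3,
    "radar": 9,
    "ultrasonic": 12,
    "lidar": 2,
}


def total_sensors(config: dict[str, int]) -> int:
    """Add up every sensor in a configuration."""
    return sum(config.values())


def within_limits(requested: dict[str, int],
                  limits: dict[str, int] = HYPERION_8_MAX_SENSORS) -> bool:
    """True if no sensor type exceeds its limit (unknown types count as 0)."""
    return all(count <= limits.get(name, 0)
               for name, count in requested.items())
```

Under these figures a fully equipped car would carry 38 sensors, and a setup asking for three LiDAR units would fall outside the stated limits.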

A range of carmakers have announced they will adopt Hyperion for their future vehicles. These include Lucid, for its DreamDrive Pro ADAS system (to be included on the Lucid Air), BYD, for some electric vehicles from 2023 production, and Jaguar Land Rover, for vehicles to be released after 2025. Meanwhile, the upcoming Polestar 3 and Volvo EX90 SUVs will also use components from Nvidia’s Drive range of products.
