Carmakers have adopted two approaches to their advanced driver assistance systems: relying on cameras only, or a multitude of sensors.

Contributor

One of the fiercest areas of competition in the automotive industry today is the field of advanced driver assistance systems (ADAS) and automated driving, both of which have the potential to significantly improve safety.

Building on these technologies, a completely autonomous, Level 5 car could provide game-changing economic or productivity benefits, whether through fleets of robotic taxis that remove the need to pay wages to drivers, or by allowing employees to work or rest in their car.

Carmakers are currently testing two key approaches to these ADAS and autonomous driving systems, with interim steps manifesting as the driver-assist features we see and use today: autonomous emergency braking (AEB), lane-keeping aids, blind-spot alerts and the like.

MORE: How autonomous is my car? Levels of self-driving explained

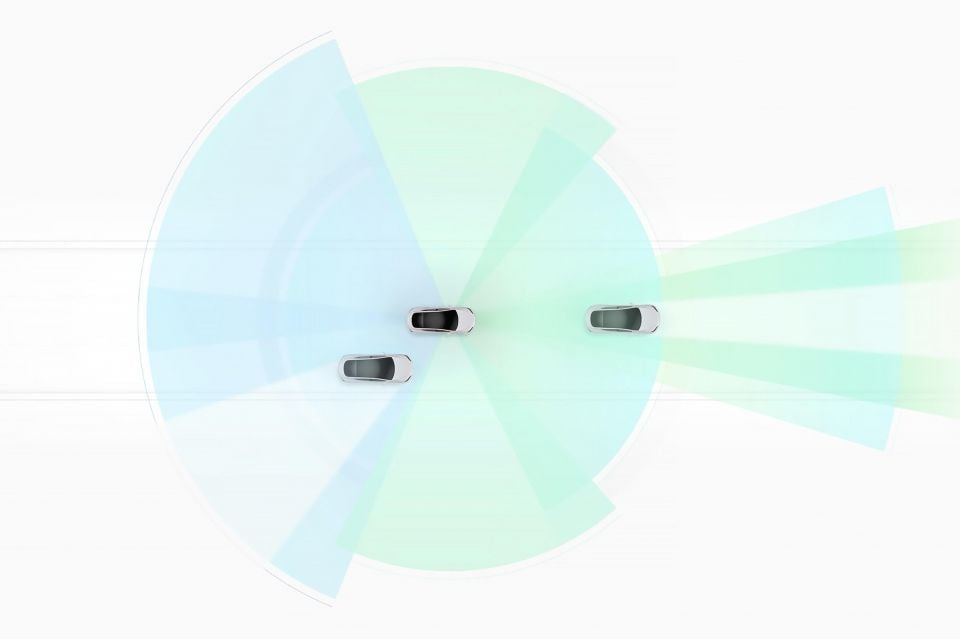

The first approach relies solely on cameras as the source of data on which the system will make a decision. The second approach is known as sensor fusion, and aims to combine data from cameras with data from other sensors such as lidar, radar and ultrasonic sensors.

Tesla and Subaru are two popular carmakers that rely on cameras for their ADAS and other autonomous driving features.

Philosophically, the rationale for using cameras only can perhaps be summarised by paraphrasing Tesla CEO Elon Musk, who has noted that there is no need for anything other than cameras, given that humans can drive without the need for anything other than their eyes.

Musk has elaborated further, saying that having multiple cameras acts like ‘eyes in the back of one’s head’, with the potential to drive a car at a significantly higher level of safety than an average person.

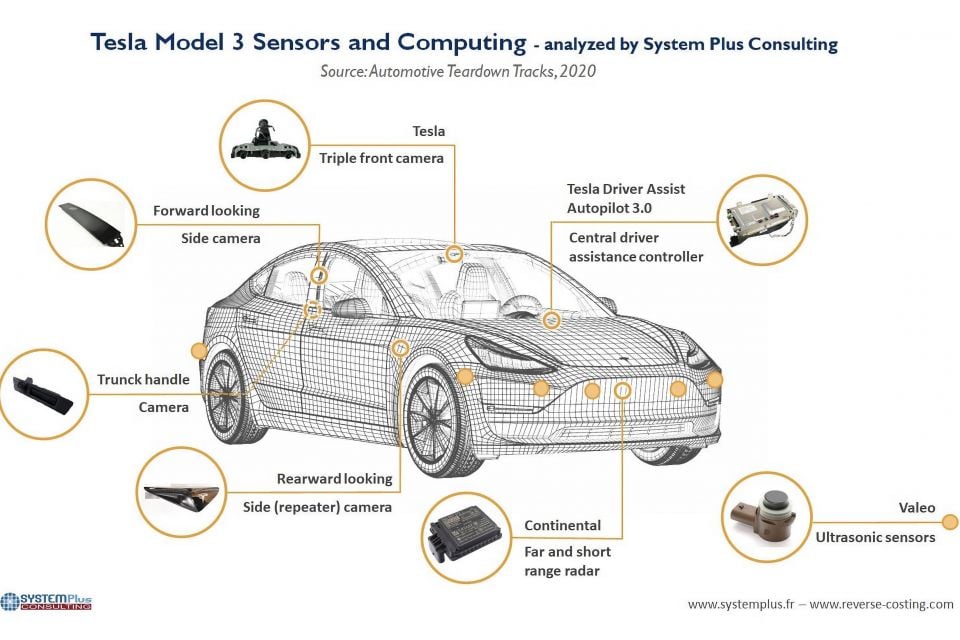

Tesla Model 3 and Model Y vehicles on sale today accordingly offer a sophisticated setup consisting of eight outward-facing cameras.

These consist of three windscreen-mounted forward-facing cameras, each with a different focal length, a pair of forward-looking side cameras mounted on the B-pillars, a pair of rearward-looking side cameras mounted within the side repeater light housings, and the obligatory reverse-view camera.

Subaru, meanwhile, uses a pair of windscreen-mounted cameras for most versions of its EyeSight suite of driver assistance systems. The latest EyeSight X generation, as seen in the MY23 Subaru Outback (currently revealed for the US but arriving here soon), adds a third wide-angle greyscale camera for a better field of view.

Proponents of these camera-only setups claim that the use of multiple cameras, each with different fields of view and focal lengths, allows for adequate depth perception to facilitate technologies such as adaptive cruise control, lane-keep assistance and other ADAS features.
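As a rough illustration of how a pair of cameras can yield depth, the sketch below applies the textbook stereo triangulation relation (depth = focal length × baseline ÷ disparity) for two side-by-side, rectified cameras. The function name and the example numbers are assumptions made for illustration, and this is not a description of how Subaru's or Tesla's systems are actually implemented.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate depth from a rectified stereo pair using Z = f * B / d.

    focal_length_px: camera focal length, in pixels
    baseline_m:      distance between the two cameras, in metres
    disparity_px:    horizontal shift of the same object between the two images
    """
    if disparity_px <= 0:
        # Zero disparity would mean the object is infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera: 1400 px focal length, lenses 0.35 m apart.
    for disparity in (49.0, 9.8):
        depth = stereo_depth_m(1400.0, 0.35, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.1f} m")
```

Note how a smaller disparity corresponds to a more distant object, which is why depth estimates from cameras tend to become less precise at long range.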

Camera-only systems also avoid having to allocate valuable computing resources to interpreting other data inputs, and remove the risk of receiving conflicting information that would force the car’s on-board computers to prioritise data from one type of sensor over another.

With radar and other sensors often mounted behind or within the front bumper, adopting a camera-only setup also has the practical benefit of reducing repair bills in the event of a collision, as these sensors would not need to be replaced.

The clear drawback of relying only on cameras is that their effectiveness would be severely curtailed in poor weather conditions such as heavy rain, fog or snow, or during times of the day when bright sunlight directly hits the camera lenses. Moreover, there is also the risk that a dirty windscreen would obscure visibility and thereby hamper performance.

However, in a recent presentation, Tesla’s former head of Autopilot Andrej Karpathy claimed that developments in Tesla Vision could effectively mitigate any issues caused by temporary inclement weather.

By using an advanced neural network and techniques such as auto-labelling of objects, Tesla Vision is able to continue to recognise objects in front of the car and predict their path for at least short distances, despite debris or bad weather that may momentarily obstruct the camera view.

If the weather is persistently bad, however, the quality or reliability of data received from a camera is unlikely to be as good as that from a fusion setup that incorporates data from sensors such as radar, which may be less affected by bad weather.

Moreover, relying on only one type of sensor removes the redundancy that comes from having different sensor types.

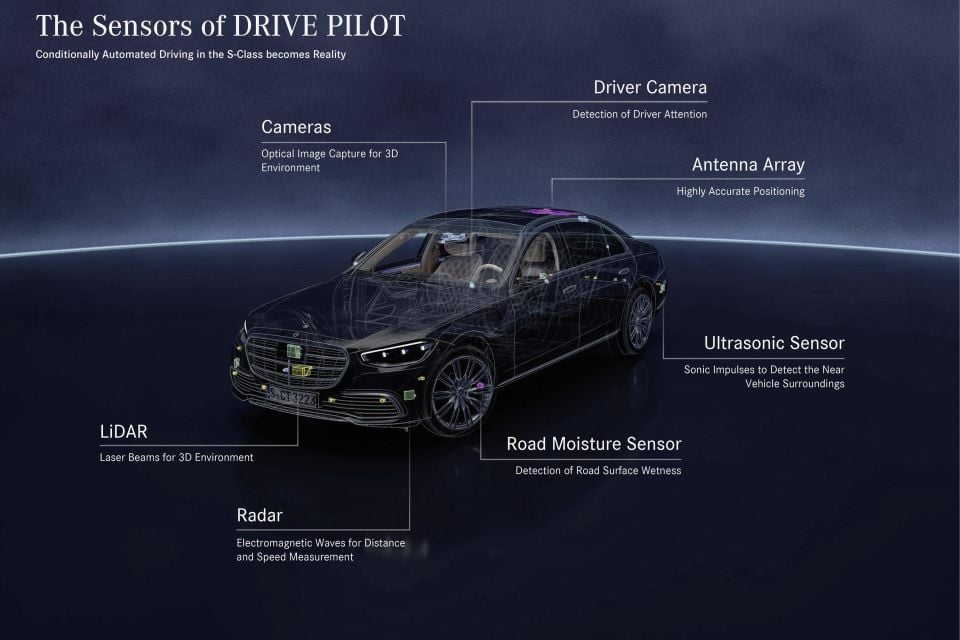

The vast majority of carmakers, in contrast, have opted to make use of multiple sensors to develop their ADAS and related autonomous driving systems.

Known as sensor fusion, this involves taking simultaneous data feeds from each of these sensors, and then combining them to produce a reliable and holistic view of the car’s current driving environment.
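To make the idea of combining feeds concrete, here is a minimal sketch of one standard technique, inverse-variance weighting, applied to distance estimates from two sensors. Production systems typically use far more elaborate methods (such as Kalman filters tracking objects over time); the `RangeReading` type, the uncertainty figures and the sensor names below are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RangeReading:
    sensor: str         # label only, e.g. "camera" or "radar"
    distance_m: float   # estimated distance to the object
    std_dev_m: float    # assumed 1-sigma uncertainty of that estimate


def fuse_ranges(readings: List[RangeReading]) -> Tuple[float, float]:
    """Combine independent estimates, trusting the less noisy sensors more.

    Returns the fused distance and its (smaller) standard deviation.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / (r.std_dev_m ** 2) for r in readings]
    total_weight = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total_weight
    fused_std = (1.0 / total_weight) ** 0.5
    return fused, fused_std


if __name__ == "__main__":
    # Hypothetical readings of the same vehicle ahead.
    readings = [
        RangeReading("camera", 42.0, 2.0),
        RangeReading("radar", 40.0, 1.0),
    ]
    distance, std = fuse_ranges(readings)
    print(f"fused distance: {distance:.1f} m (+/- {std:.2f} m)")
```

The fused estimate sits closer to the sensor with the lower assumed uncertainty, and its own uncertainty is lower than either input, which is the basic appeal of fusing independent measurements.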

As discussed above, in addition to a multitude of cameras, the sensors deployed typically include radar, ultrasonic sensors and in some cases, lidar sensors.

Radar (radio detection and ranging) detects objects by emitting radio wave pulses and measuring the time taken for these to be reflected back.

Because radio waves have a far longer wavelength than light, radar generally does not offer the same level of detail that can be provided by lidar or cameras. With its low resolution, it is unable to accurately determine the precise shape of an object, or distinguish between multiple smaller objects placed closely together.

However, it is largely unaffected by weather conditions such as rain, fog or dust, and is generally a reliable indicator of whether there is an object in front of the car.
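The time-of-flight principle behind radar reduces to simple arithmetic: the pulse travels to the object and back, so the distance is the wave speed multiplied by half the round-trip time. The sketch below shows this calculation; the function name and sample timings are illustrative assumptions, and real radar units do considerably more signal processing than this.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves travel at the speed of light


def echo_distance_m(round_trip_s: float, wave_speed_m_s: float = SPEED_OF_LIGHT_M_S) -> float:
    """Distance to a reflecting object from the round-trip time of a pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Halve the result because the pulse covers the distance twice.
    return wave_speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical radar echo received one microsecond after transmission.
    print(f"radar: {echo_distance_m(1e-6):.1f} m")
    # Same arithmetic for a sound-based sensor, assuming ~343 m/s in air.
    print(f"ultrasonic: {echo_distance_m(0.01, wave_speed_m_s=343.0):.3f} m")
```

The same relation applies to any echo-based sensor once the appropriate wave speed is substituted, which is why the wave speed is left as a parameter.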

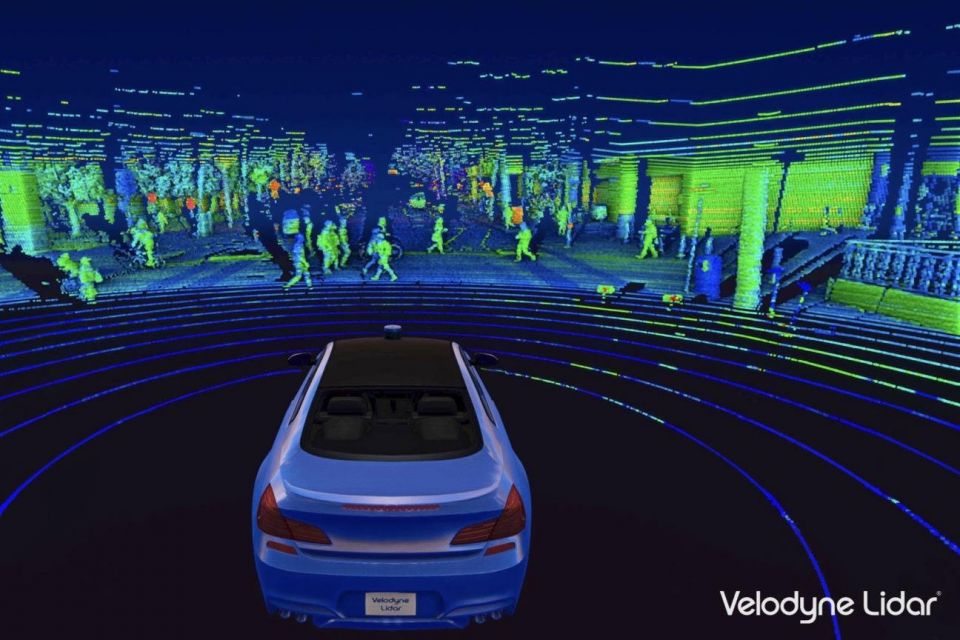

A lidar (light detection and ranging) sensor works on a similar fundamental principle to radar, but instead of radio waves, lidar sensors use lasers. These lasers emit light pulses, which are reflected by any surrounding objects, and the time each pulse takes to return indicates how far away the object is.

Even more so than cameras, lidar can create a highly accurate 3D map of a car’s surroundings. It is able to distinguish between pedestrians and animals, and can also track the movement and direction of these objects with ease.

However, like cameras, lidar continues to be affected by weather conditions, and remains expensive to install.
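A lidar's 3D map is built from many individual returns, each consisting of a measured range plus the direction in which the laser was pointing. As a simplified sketch, the conversion of one such return into a 3D point can be written as below. The axis convention (x forward, y left, z up) and the function name are assumptions chosen for illustration, not any particular sensor's output format.

```python
import math
from typing import Tuple


def lidar_point_xyz(range_m: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one lidar return (range plus beam angles) into x, y, z in metres.

    Assumed convention: x points forward, y to the left, z upwards;
    azimuth is measured from straight ahead, elevation from the horizontal.
    """
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)  # projection onto the ground plane
    x = horizontal * math.cos(azimuth)
    y = horizontal * math.sin(azimuth)
    z = range_m * math.sin(elevation)
    return x, y, z


if __name__ == "__main__":
    # Hypothetical return: 20 m away, 30 degrees to the left, 2 degrees below horizontal.
    x, y, z = lidar_point_xyz(20.0, 30.0, -2.0)
    print(f"x={x:.2f} m, y={y:.2f} m, z={z:.2f} m")
```

Repeating this for every pulse in a sweep produces the point cloud from which objects are then identified and tracked.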

Ultrasonic sensors have traditionally been used in the automotive space as parking sensors, providing the driver with an audible signal of how close they are to other cars through a technique known as echolocation, as also used by bats in the natural world.

Effective at measuring short distances at low speeds, in the ADAS and autonomous vehicle space these sensors could allow a car to autonomously find an empty spot in a multi-storey carpark and park itself, for example.

The primary benefit of adopting a sensor fusion approach is the opportunity to have more accurate, more reliable data in a wider range of conditions, as different types of sensors are able to function more effectively in different situations.

This approach also offers the chance of greater redundancy in the event that a particular sensor does not function.
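One simple way to picture this redundancy is a function that still returns a usable distance when one sensor drops out, and that is not thrown off by a single wildly wrong reading. The sketch below uses the median of whichever readings are available; this is a deliberately simplified assumption for illustration, and the function name, sensor labels and values are hypothetical.

```python
from statistics import median
from typing import Dict, Optional


def redundant_distance_m(readings: Dict[str, Optional[float]], min_sensors: int = 1) -> Optional[float]:
    """Return a distance from whichever sensors are currently reporting.

    A value of None marks a sensor that has failed or is obscured.
    Returns None when fewer than min_sensors are available.
    """
    available = [value for value in readings.values() if value is not None]
    if len(available) < min_sensors:
        return None
    # The median ignores a single outlier when three or more sensors report.
    return median(available)


if __name__ == "__main__":
    # Hypothetical case: the camera is blinded by glare, radar and lidar still work.
    print(redundant_distance_m({"camera": None, "radar": 40.2, "lidar": 39.8}))
    # Hypothetical case: every sensor has dropped out.
    print(redundant_distance_m({"camera": None, "radar": None, "lidar": None}))
```

A camera-only system has no equivalent fallback if its cameras are all affected by the same condition, which is the trade-off the article describes.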

Multiple sensors, of course, also mean multiple pieces of hardware, and ultimately this increases the cost of sensor fusion setups beyond that of a comparable camera-only system.

For example, lidar sensors are typically only available in luxury vehicles, such as the Mercedes-Benz EQS with its Drive Pilot system.
